Mrs Tan, 58 years old, is recovering from liver surgery. At 2am, she wakes up due to pain and nausea. But instead of calling her coordinator, she opens ChatGPT Health and types: “I have just had a liver surgery a few days ago. I’m taking some painkillers but am still in pain. Can I take something stronger?”

Seconds later, Dr LLM replies in a fluent, confident tone, and its answer is mostly accurate. Mrs Tan feels reassured and relieved. She doesn’t “bother” her healthcare team.

But what Dr LLM doesn’t know is that she’s on warfarin and that her liver function tests were already trending abnormally at discharge. Together, these might predispose her to a serious drug interaction and a bleeding risk that may require urgent review, not reassurance.

Sound familiar? You may think that this is hypothetical, but it is happening right now.

What Has Changed from Dr Google to Dr LLM?

Dr Google made patients search, read, absorb and synthesize. It required some cognitive effort, and a human was still interpreting the results of the search. Nobody would mistake a search results page for a doctor.

But Dr LLM is different. It listens, converses and responds like a knowledgeable friend and companion. Patients don’t consult it because it’s more clinically accurate than healthcare professionals — they consult it because it’s available at 2am (as and when they need it), non-judgmental, and doesn’t put them on hold. Dr LLM’s replies may even be more empathetic and comforting than talking to their healthcare team!

That’s not a technology problem. That’s a healthcare delivery problem. We, healthcare professionals (HCPs), may think of ourselves as superior to Dr LLM, but its popularity actually reveals a gap we need to close, not a competitor we need to defeat.

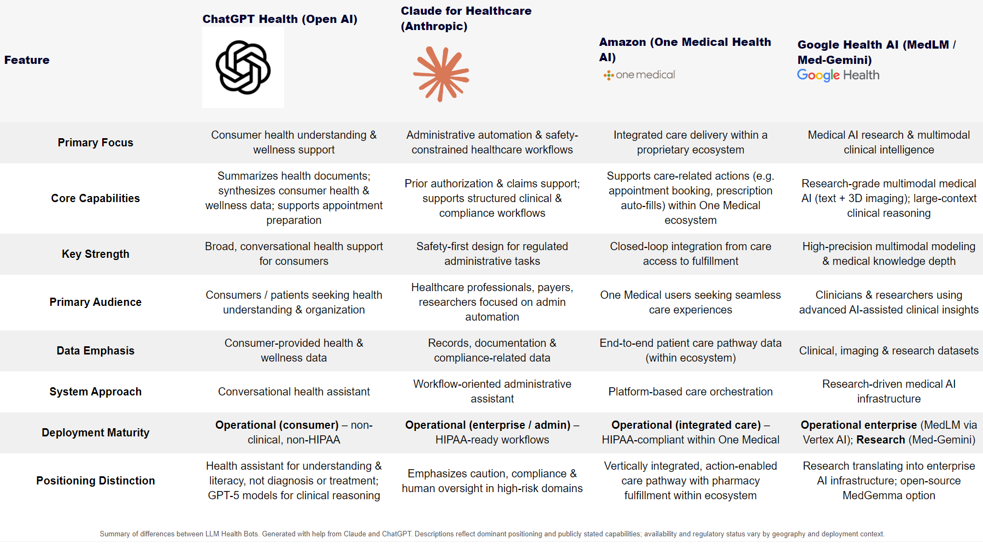

AI Healthcare Products Comparison

Just last month, in Jan 2026, all the major AI companies launched their own bespoke health AI bots within weeks of each other. To make sense of it all, I created a quick comparison table of the current landscape, with the help of my “good friends” ChatGPT and Claude.

| Feature | ChatGPT Health (OpenAI) | Claude for Healthcare (Anthropic) | Amazon (One Medical Health AI) | Google Health AI (MedLM / Med-Gemini) |
|---|---|---|---|---|
| Primary Focus | Consumer health understanding & wellness support | Administrative automation & safety-constrained healthcare workflows | Integrated care delivery within a proprietary ecosystem | Medical AI research & multimodal clinical intelligence |
| Core Capabilities | Summarizes health documents; synthesizes consumer health & wellness data; supports appointment preparation | Prior authorization & claims support; supports structured clinical & compliance workflows | Supports care-related actions (e.g. appointment booking, prescription auto-fills) within One Medical ecosystem | Research-grade multimodal medical AI (text + 3D imaging); large-context clinical reasoning |
| Key Strength | Broad, conversational health support for consumers | Safety-first design for regulated administrative tasks | Closed-loop integration from care access to fulfillment | High-precision multimodal modeling & medical knowledge depth |
| Primary Audience | Consumers / patients seeking health understanding & organization | Healthcare professionals, payers, researchers focused on admin automation | One Medical users seeking seamless care experiences | Clinicians & researchers using advanced AI-assisted clinical insights |
| Data Emphasis | Consumer-provided health & wellness data | Records, documentation & compliance-related data | End-to-end patient care pathway data (within ecosystem) | Clinical, imaging & research datasets |
| System Approach | Conversational health assistant | Workflow-oriented administrative assistant | Platform-based care orchestration | Research-driven medical AI infrastructure |
| Deployment Maturity | Operational (consumer) – non-clinical, non-HIPAA | Operational (enterprise / admin) – HIPAA-ready workflows | Operational (integrated care) – HIPAA-compliant within One Medical | Operational enterprise (MedLM via Vertex AI); Research (Med-Gemini) |
| Positioning Distinction | Health assistant for understanding & literacy, not diagnosis or treatment; GPT-5 models for clinical reasoning | Emphasizes caution, compliance & human oversight in high-risk domains | Vertically integrated, action-enabled care pathway with pharmacy fulfillment within ecosystem | Research translating into enterprise AI infrastructure; open-source MedGemma option |

Although information about these health bots is still changing rapidly, there are two points that I want to emphasize here:

- Notice their strategic differences: OpenAI went broad – connecting to consumer health; Anthropic went deep – integrating with healthcare systems & workflows; Amazon went vertical – creating a closed-loop healthcare ecosystem; Google went research-first – creating research-grade multimodal AI.

- But here’s what they ALL share: None claim to replace HCPs; All emphasize they’re assistive tools; All require human oversight.

Will AI Cause the Extinction of Homo Sapiens HCPs?

A group of AI researchers published a paper last year that took the world by storm. Titled AI 2027, it describes a detailed scenario of how superhuman AI could reshape the world over the next decade, with an impact predicted to exceed that of the Industrial Revolution. Watch this YouTube video to see what the scenario looks like.

So is this scenario too far-fetched? Or will it come to pass?

If our professional identity rests primarily on tasks that AI can automate, such as protocol retrieval, documentation, and routine calculations, we face obsolescence. But what can make us irreplaceable? Well, we need to enhance our skill sets. But in what? Do we (as HCPs) need to learn computer science? What sort of programming languages do we need to learn? In a keynote address last year at London Tech Week (Jun 2025), Jensen Huang (Nvidia CEO) said that the new programming language is “human”!

Programming is a good skill to have, but not a core skillset. What distinguishes an excellent HCP (e.g. pharmacist, nurse, doctor or surgeon) from an AI is the integration of technical knowledge with clinical judgment, emotional intelligence, ethical reasoning, and the ability to hold uncertainty with a human being. These skills and experience remain firmly in our domain.

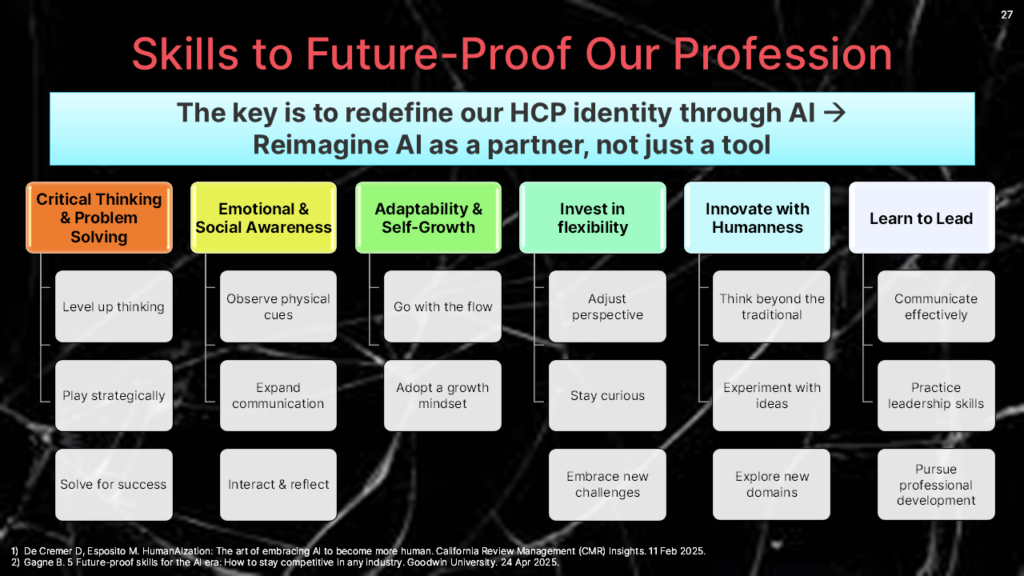

Six Skillsets to Future-Proof Our Profession

The key to redefining our HCP identity in the age of AI is to re-imagine AI as a cohesive partner in our care, not just a collaborative tool. I presented six skill domains for this at a recent talk.

Here’s what these skills may look like in Mrs Tan’s scenario:

1. Critical Thinking & Problem Solving: The pharmacist recognizes that a stronger painkiller could interact with Mrs Tan’s warfarin and cause serious bleeding. While the AI gave a plausible answer, the pharmacist catches a life-threatening risk.

2. Emotional & Social Awareness: AI cannot observe the subtle non-verbal cues — the way that Mrs Tan hesitates when asked about her pain, or avoids mentioning that she is too afraid to look at her wound. The nurse who built rapport during her hospital stay creates the psychological safety that makes Mrs Tan want to call rather than just type into a chatbot.

3. Adaptability & Self-Growth: Rather than being frustrated that Mrs Tan consulted Dr LLM first, the clinician treats it as intelligence. He notices that Mrs Tan still has jaundice after the surgery, potentially a red-flag symptom, and starts to look for gaps in her care after discharge.

4. Invest in Flexibility: Stay curious about AI tools and use them yourself so you can guide patients wisely. The healthcare team might explicitly educate Mrs Tan on how to use the AI on her phone: “If you use AI between appointments, any noticeable symptoms like jaundice, fever, or severe pain always warrant calling the coordinator or going to the emergency department. Please don’t depend on the AI, as it may not have the full medical context to provide a reliable answer.”

5. Innovate with Humanness: The pharmacy team could develop an AI-enhanced medication information system that is available 24/7, so that high-risk queries are flagged for urgent follow-up — thus extending professional care, not replacing it.

6. Learn to Lead: The healthcare team should advocate for institutional policies that integrate AI into care pathways thoughtfully. AI-assisted triage that routes complex post-surgical queries to human HCPs is not something that is impossible — it is a design choice that we should be championing now.
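To make the "design choice" in points 5 and 6 concrete, here is a minimal Python sketch of a rule-based triage step that sits in front of a health chatbot. Everything in it is an assumption made for illustration: the names `PatientContext`, `TriageDecision` and `triage_query`, the keyword lists (drawn only from the symptoms and the one drug named in this article), and the two route labels. It is not how any of the products above work, and it is not clinically validated; a real rule set would have to be defined and tested by the clinical team.

```python
from dataclasses import dataclass, field

# Hypothetical red-flag terms: only the symptoms named in this article.
RED_FLAG_TERMS = ("jaundice", "fever", "severe pain")

# Hypothetical high-risk medications: warfarin is the only one named here.
HIGH_RISK_MEDICATIONS = ("warfarin",)

# Hypothetical hints that the patient is asking a medication question.
MEDICATION_HINTS = ("painkiller", "medication", "dose", "tablet")


@dataclass
class PatientContext:
    """Illustrative patient record; the field names are assumptions."""
    recent_surgery: bool = False
    medications: tuple = ()
    abnormal_liver_tests: bool = False


@dataclass
class TriageDecision:
    route: str  # "urgent_hcp_review" or "ai_can_respond"
    reasons: list = field(default_factory=list)


def triage_query(query: str, context: PatientContext) -> TriageDecision:
    """Route a patient query to a human HCP if any illustrative rule fires."""
    text = query.lower()
    reasons = []

    # Rule 1: any red-flag symptom in the message goes to a human.
    for term in RED_FLAG_TERMS:
        if term in text:
            reasons.append(f"red-flag symptom mentioned: {term}")

    # Rules 2-4: a medication question combined with risky known context.
    if any(hint in text for hint in MEDICATION_HINTS):
        if context.recent_surgery:
            reasons.append("medication question after recent surgery")
        for drug in context.medications:
            if drug.lower() in HIGH_RISK_MEDICATIONS:
                reasons.append(f"medication question while on {drug.lower()}")
        if context.abnormal_liver_tests:
            reasons.append("medication question with abnormal liver tests")

    route = "urgent_hcp_review" if reasons else "ai_can_respond"
    return TriageDecision(route=route, reasons=reasons)


# Mrs Tan's 2am message, with the context her care team already holds.
mrs_tan = PatientContext(
    recent_surgery=True,
    medications=("warfarin",),
    abnormal_liver_tests=True,
)
decision = triage_query(
    "I'm taking some painkillers but am still in pain. "
    "Can I take something stronger?",
    mrs_tan,
)
print(decision.route)
for reason in decision.reasons:
    print(" -", reason)
```

The point of the sketch is that the routing depends on context the chatbot alone does not have. With an empty `PatientContext`, the same message would be routed to `ai_can_respond`, which is exactly the gap in Mrs Tan's story.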

Partnership Model in Action

What’s the ideal scenario for Mrs Tan? Dr LLM, recognizing the complexity of jaundice post-surgery, flags the query for urgent HCP review rather than offering reassurance. The pharmacist catches the warfarin interaction. The nurse escalates the jaundice for same-day review. The clinician orders urgent LFTs. The healthcare team comes together to manage Mrs Tan’s post-surgical journey so that she gets coordinated, personalized care.

The good thing about AI is that it provides accessibility and triage. But humans should provide the judgment, context, and relationship. Neither replaces the other. Dr LLM is not succeeding because it is more knowledgeable than us. It is succeeding because it is more accessible. This distinction matters very much, especially when we have an overwhelming workload. But accessibility is something we can redesign for, while clinical judgment, empathy, and ethical reasoning are things that only we can and should provide.

Your patients are already seeing Dr LLM. The question is whether you want to be part of that conversation — or find out about it after the fact.